Elon Musk’s X app ran ads on #whitepower and other hateful hashtags

X running ads on 20 racist and antisemitic hashtags more than 18 months after Musk said that he would demonetize hate posts.

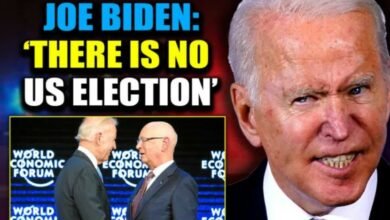

Elon Musk’s social media app X has been placing advertisements in the search results for at least 20 hashtags used to promote racist and antisemitic extremism, including #whitepower, according to a review of the platform.

NBC News found the advertisements by searching various hashtags used to promote racism and antisemitism, and by browsing X accounts that often post racial or religious hatred. The hashtags range from obvious slogans such as #whitepride and #unitethewhite to more fringe and coded words such as #groyper (a movement of online white nationalists) and #kalergi (a debunked theory alleging a conspiracy to eliminate white people from Europe).

While the hashtags make up a small percentage of what is posted daily on X, they add to previous examples showing how the platform has struggled to maintain control of its ad network and how that network intersects with hate speech, an issue that has plagued the platform for years. The placements allow X to monetize extremist content more than 18 months after Musk said that he would demonetize hate posts on the platform he owns.

It’s not clear to what extent people at X were aware that the company was monetizing the extremist hashtags prior to NBC News’ reporting. Twitter, as the platform was then known, began placing ads amid search results around 2010. Last year, some users posted on X that they saw ads amid the search results for #heilhitler. Some of those users tweeted about the issue to X management, meaning the company could have known for months, at least, that it was monetizing certain problematic hashtag searches.

After NBC News sought comment from X on the findings, X appeared to have taken action against at least five of the 20 hashtags that previously had ads, removing the ability of users to search for the hashtags. The other 15, including #whitepower, remained searchable.

In a statement to NBC News, X did not dispute the findings but said the company “had already taken action on a number of these terms and will continue to expand our approach as necessary.” The statement also reiterated the company’s intention, announced in January, to open a 100-person “center of excellence” for content moderation.

The statement, sent via email, said the NBC News findings did not reflect the full extent of the company’s enforcement practices, including around violent content.

“X has clear rules in place relating to violent and hateful speech, and robust protections in place for advertisers,” X said in the statement. “One of our enforcement tools is to limit the reach of posts, which is not reflected in this research.”

Representatives for X did not answer emailed questions about how the company decides which hashtags to demonetize or block entirely.

On Thursday, after NBC News published this report, X said in a post on one of its corporate accounts that it had rolled out a change last month to greatly limit ads in search results. The company said it now maintains a list of “brand-safe and commercially relevant search terms” and allows search ads only alongside those terms or terms chosen by advertisers.

X bans the promotion or glorification of violence and has previously applied the policy to racist and antisemitic content. In April, an NBC News report found that at least 150 paid X subscribers at the time were sharing pro-Nazi content, including speeches by Adolf Hitler. Being a paid subscriber of X can make users eligible to enroll in X’s revenue sharing program, which gives creators a cut of ad dollars generated from their content.

Anika Collier Navaroli, who worked at Twitter as a senior content policy expert before leaving in 2021, said that X could block or demonetize specific hashtags like #whitepower if it wanted to without compromising its free speech principles.

“There’s no freedom to trend, or populate at the top of search, or be recommended to new people. These are not freedoms,” said Navaroli, now a senior fellow at the Tow Center for Digital Journalism at Columbia University.

Musk has rolled back much of the content moderation on X since he bought the app, then known as Twitter, in October 2022.

X has lost dozens of major advertisers under Musk’s ownership, with 74 of the top 100 U.S. advertisers from October 2022 no longer spending on the platform as of May, according to research firm Sensor Tower. At least some of those former advertisers, such as Disney, quit X after reports about antisemitism and other forms of hate on the platform, including in posts by Musk himself. Musk, the CEO of Tesla and SpaceX, created a firestorm in November when he embraced the concept of the “great replacement,” which says there is a conspiracy to replace the white population with nonwhite people.

In April, Hyundai paused spending on X after a sponsored post from the automaker appeared next to antisemitic and pro-Nazi posts. The company said Tuesday it had not resumed such spending.

Because hashtags are used to amplify the reach of content, they can be ripe for abuse, and for years they have been a significant battleground for content moderation as tech companies such as Meta and TikTok decide which hashtags to demonetize or restrict.

Social media apps have various ways to restrict hashtags, including blocking them from searches and removing them from auto-complete functions. Last year, X blocked searches for hashtags associated with child sex abuse material after NBC News reported that certain terms served as rallying points for people seeking to trade or sell the illegal material. At the time, an X representative said the company was using automated detection models to compile a list of thousands of hashtags that could violate the company’s policies.

Before Wednesday, X appeared to have blocked at least three antisemitic hashtags, according to searches of the platform. A search for those hashtags produces an error message: “Something went wrong, but don’t fret — it’s not your fault.” In all, NBC News reviewed 50 hashtags. After NBC News contacted X about them, 12 of the 50 hashtags appeared to be blocked.

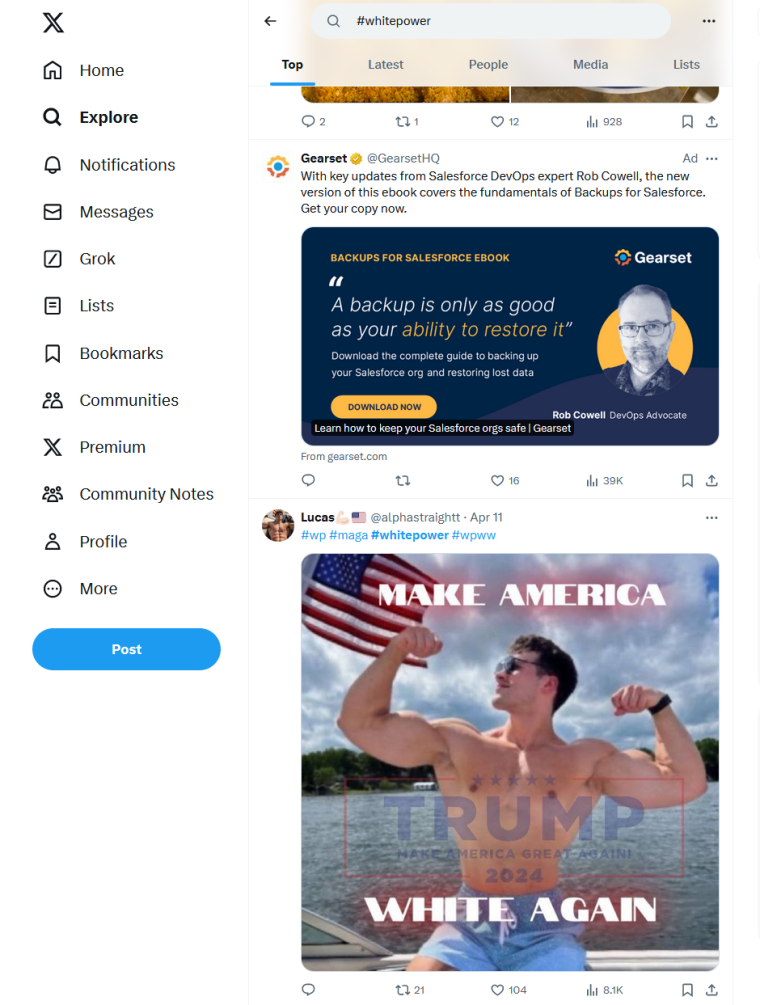

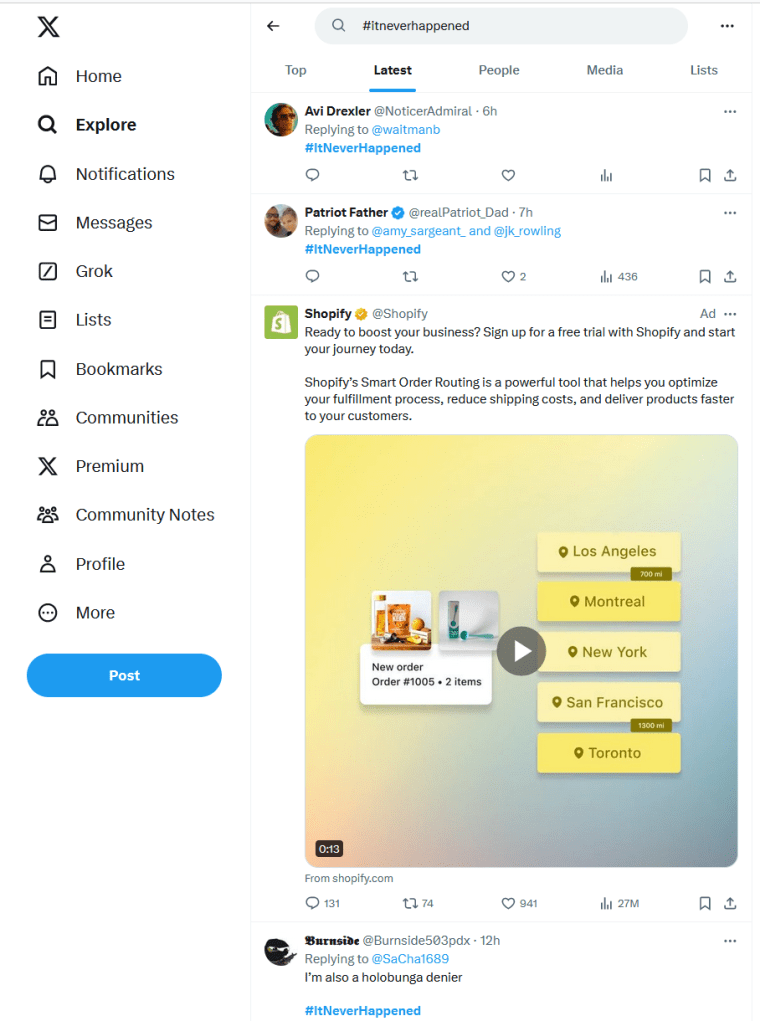

The 20 monetized extremist hashtags on X included six associated with explicit white supremacy, eight promoting antisemitism and six related to anti-immigrant conspiracy theories. Gearset and Shopify, two companies that make business software, and MS, a company that creates music playlists, were among the X advertisers whose ads ran among the search results for racist and antisemitic hashtags; the three companies did not respond to requests for comment.

Companies don’t necessarily choose the type of content their ads appear next to. They can use block lists to try to avoid certain content, though on X’s ad system, they must list keywords to avoid one by one.

Tech companies do not publish their block lists and vary in how often they restrict hashtags to reduce the amplification of hate speech, sexual content or other objectionable material. In 2013, a blogger compiled a list of more than 200 hashtags that Instagram had made unsearchable. Most of them referred to sex or nudity, which Instagram limits while X generally does not.

X differs from rival social media sites in keeping certain racist hashtags searchable. Instagram and TikTok do not show users any results when they search for #whitepower or #whitepride, two hashtags that X has allowed and placed advertisements alongside.

Of the 50 reviewed hashtags, searches on X for 45 of them returned recent racist or antisemitic posts. On Instagram, 15 of the 50 hashtags returned racist or antisemitic posts, with the other 35 either blocked or producing anti-racist or unrelated content. On TikTok, 10 of the 50 hashtags returned racist or antisemitic posts, with the rest either blocked or producing other content.

Instagram, which is owned by Meta, discloses that certain hashtags may not be searchable if the associated content “consistently” does not follow the app’s community guidelines. The company says it periodically reviews hashtags under moderation and may revise its decisions based on the content being posted using the hashtags.

Instagram said in a statement that, short of removing a problematic hashtag entirely from search, it may take less restrictive steps such as removing individual posts. Meta has policies that apply to specific hateful ideologies such as Nazism.

Of the 15 hashtags that produced racist or antisemitic content on Instagram per the NBC News review, Instagram made six of them non-searchable by Wednesday.

TikTok described a similar policy and process, saying that it removes or restricts hashtags that violate the app’s rules.

TikTok said that it tailors its approach depending on circumstances, with “public interest exceptions” to leave up some problematic content for educational or satirical reasons.

“We recognize that some content that would otherwise violate our rules may be in the public interest to view,” it says on a website.

Of the 10 hashtags that produced racist or antisemitic content on TikTok per the NBC News review, TikTok made seven of them non-searchable by Wednesday.

Some of the extremist hashtags on X have spiked in popularity in recent months, according to research by Darren Linvill, co-director of Clemson University’s Media Forensics Hub. Linvill, who has researched troll factories and disinformation tactics, analyzed several of the hashtags at the request of NBC News.

In late March, a Holocaust denial hashtag echoing the debunked conspiracy theory that it “never happened” spiked to more than 2,200 mentions in one day after previously getting almost no activity, according to Linvill’s findings. Linvill said the spike appeared to be the result of an organized effort by a group of accounts to get the hashtag trending, with no clear connection to offline events. He said several of the accounts were later suspended.

The hashtag was searchable on X until Wednesday, when it appeared to be blocked. Top posts on that hashtag included images depicting antisemitic stereotypes and memes depicting Holocaust denial.

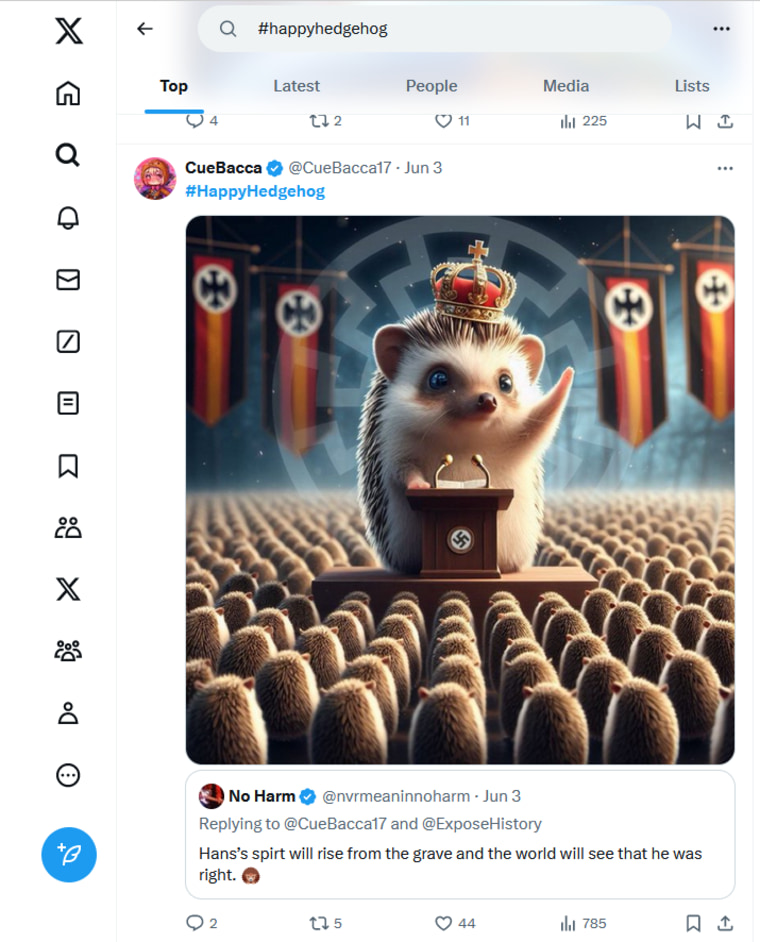

AI appears to be fueling some of the content associated with certain hashtags. In one series of posts, neo-Nazi users have posted AI-generated cartoon hedgehogs with the animal as a stand-in for Hitler. Linvill said use of the associated hashtag spiked this year through early May, with accounts repeatedly posting images of hedgehogs in paramilitary and Nazi outfits holding firearms and giving Nazi salutes. NBC News did not see advertisements in the search results for that hashtag.

Megan Squire, deputy director for data analytics at the Southern Poverty Law Center, an anti-hate group founded in 1971, said that X isn’t living up to its own policies when it allows violent extremists to use the platform’s amplification features.

“It shows that it’s not top of mind and it’s not on anyone’s to-do list for the week,” she said. “It’s a choice.”

She said that X’s approach to violent extremism contradicts its hands-on attempts to be an arbiter of popular culture — attracting presidential candidates and being a hub of discussion for major events.

“They can’t have it both ways,” she said. “If you’re going to have the power to weigh in on cultural issues, then that extends to the responsibility to use that power for good.”